Development of a Self-Modulating Model for a Robotic Embodied System

Project Period: 2024-2028

Abstract

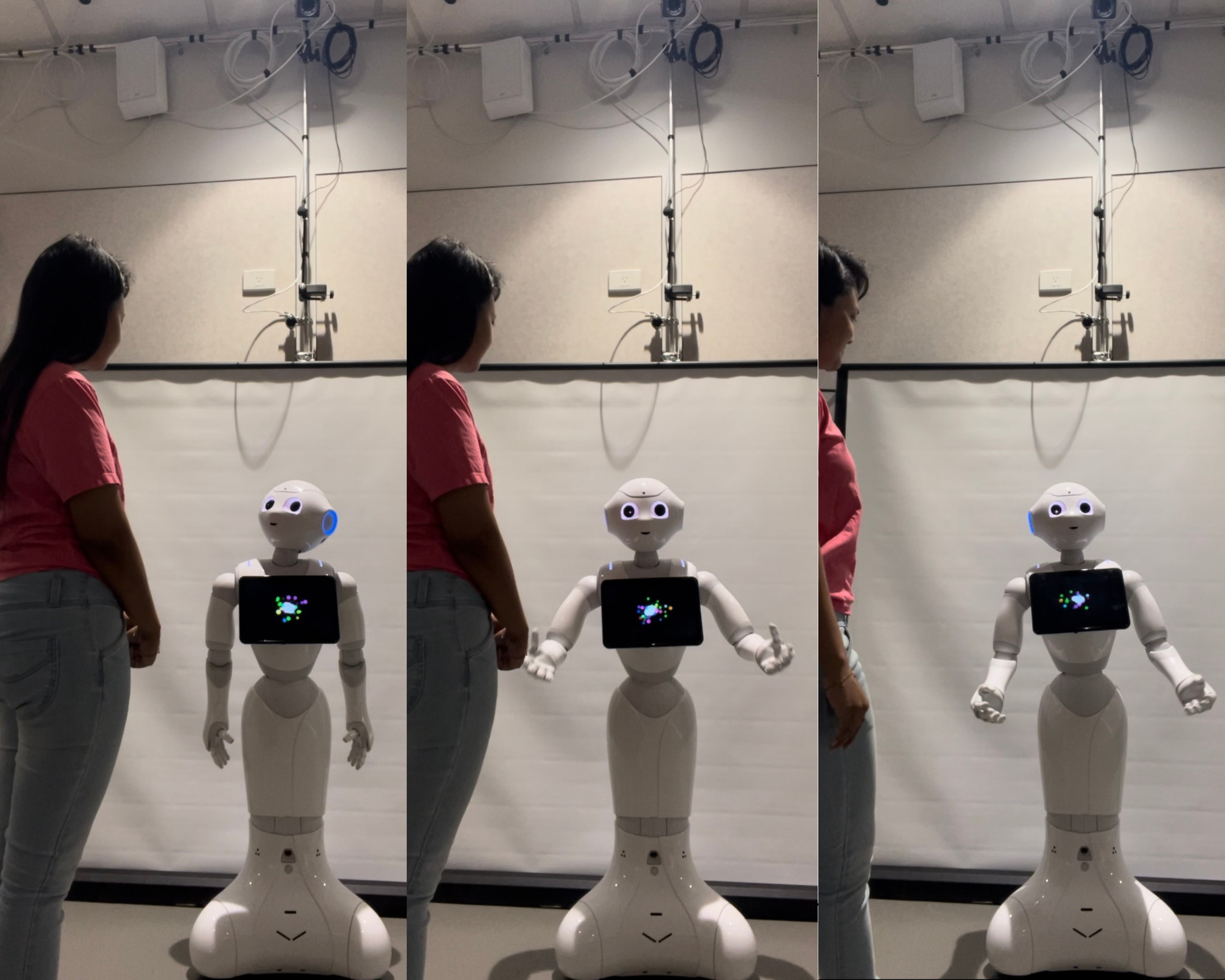

Humans have a highly evolved capacity to dynamically regulate their level of social presence in response to environmental cues, social norms, and contextual expectations. This capability plays a crucial role in shaping Human-Robot Interaction (HRI), yet the modulation of social presence remains underexplored in embodied robotic systems. This research advances the field by systematically redefining social presence within HRI and developing a novel framework for its dynamic modulation: a structured approach to adapting robotic presence as contextual and social cues change.

A key contribution of this study is the real-time implementation of a generic, multi-modal robotic presence modulation system. The system incorporates context identification, enabling robots to autonomously recognize and respond to diverse social and environmental scenarios. In addition, a reinforcement-learning-based framework is developed that allows robots to fine-tune their presence expressions using user feedback and contextual awareness. By integrating multi-modal data sources such as gaze tracking, speech sentiment analysis, and environmental stimuli, this research demonstrates how antecedents of social presence, including attention and user engagement, can be used to drive adaptive robot behavior.
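As a rough illustration of how context identification and feedback-driven tuning could fit together, here is a minimal sketch under loudly stated assumptions; it is not the project's implementation. The cue names (`gaze_on_robot`, `speech_sentiment`, `alarm_detected`), the three discrete presence levels, the rule-based `identify_context` classifier, the context labels, and the simulated feedback function are all hypothetical. The sketch also replaces full reinforcement learning with its simplest special case, an epsilon-greedy contextual bandit, which learns one running-mean reward estimate per (context, presence level) pair from a scalar user-feedback signal.

```python
import random
from collections import defaultdict

# Hypothetical discrete presence settings (e.g. bundles of voice volume, motion, proximity).
PRESENCE_LEVELS = ("low", "medium", "high")


def identify_context(gaze_on_robot: bool, speech_sentiment: float, alarm_detected: bool) -> str:
    """Hypothetical rule-based context identifier over three multimodal cues."""
    if alarm_detected:
        return "alarmed"
    if gaze_on_robot or speech_sentiment > 0.2:
        return "social"
    return "disengaged"


class PresenceBandit:
    """Epsilon-greedy contextual bandit: a running-mean reward estimate per (context, level)."""

    def __init__(self, levels, epsilon=0.2, seed=0):
        self.levels = tuple(levels)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value = defaultdict(float)  # (context, level) -> mean observed reward
        self.count = defaultdict(int)    # (context, level) -> number of updates

    def greedy(self, context):
        # Highest estimated reward; ties resolve to the earliest level in self.levels.
        return max(self.levels, key=lambda lvl: self.value[(context, lvl)])

    def choose(self, context):
        # Explore a random level with probability epsilon, otherwise exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.levels)
        return self.greedy(context)

    def update(self, context, level, reward):
        # Incremental mean: v <- v + (r - v) / n
        key = (context, level)
        self.count[key] += 1
        self.value[key] += (reward - self.value[key]) / self.count[key]


# Hypothetical user preference, used only to simulate feedback in this sketch.
PREFERRED = {"social": "medium", "disengaged": "low", "alarmed": "high"}


def simulated_feedback(context, level):
    """Stand-in for user feedback: reward 1.0 for the preferred level, else 0.0."""
    return 1.0 if PREFERRED[context] == level else 0.0


def train(steps=3000, seed=0):
    cue_rng = random.Random(seed + 1)
    agent = PresenceBandit(PRESENCE_LEVELS, epsilon=0.2, seed=seed)
    for _ in range(steps):
        # Simulated cues standing in for gaze tracking, speech sentiment and an alarm sensor.
        gaze = cue_rng.random() < 0.5
        sentiment = cue_rng.uniform(-1.0, 1.0)
        alarm = cue_rng.random() < 0.1
        context = identify_context(gaze, sentiment, alarm)
        level = agent.choose(context)
        agent.update(context, level, simulated_feedback(context, level))
    return agent


if __name__ == "__main__":
    agent = train()
    print({ctx: agent.greedy(ctx) for ctx in PREFERRED})
```

The bandit keeps the sketch short because it treats each interaction turn independently; a full reinforcement-learning formulation would additionally model how a presence change affects later engagement.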

To evaluate the impact of presence modulation in real-world settings, the project proposes an in-the-wild case study in which an embodied robot will be deployed across three distinct interaction contexts: a social scenario, a disengaged scenario, and an alarmed/emergency scenario. The evaluation aims to assess how adaptive presence influences user perception, engagement, and overall interaction quality. The study also explores potential real-world applications, particularly in healthcare settings, where a care robot could autonomously regulate its presence based on situational demands.

By bridging the gap between theoretical understanding and practical implementation, this research provides a foundational framework for adaptive social presence in HRI, offering new insights into how robotic systems can modulate their presence effectively to enhance human-robot interactions.

Team Members: Damith Herath; Janie Busby Grant; Maleen Jayasuriya; Nipuni Wijesinghe

Publication

N. H. Wijesinghe, Y. Peng, S. Konrad, M. Jayasuriya, J. B. Grant, and D. Herath, “Reframing Social Presence for Human Robot Interaction,” in Proceedings of the 2025 ACM/IEEE International Conference on Human-Robot Interaction, 2025, pp. 1716–1721. doi: 10.5555/3721488.3721751.

Damith Herath

Founder and Lead, Collaborative Robotics Lab

Professor Damith Herath is the founder and leader of the Collaborative Robotics Lab (CRL). His work explores how robots and humans can collaborate, with an emphasis on the intersection of engineering, psychology, and the arts. His interdisciplinary approach actively draws on insights from diverse fields, promoting innovation and collaboration that transcend traditional disciplinary boundaries.

Janie Busby Grant

Psychology Research Lead

Associate Professor Janie Busby Grant is the Psychology Research Lead at CRL, where her work integrates human-robot interaction and artificial intelligence with a foundation in cognitive psychology and research design. With a strong emphasis on practical applications, Janie brings a reconciliatory approach to interdisciplinary research, particularly in exploring the factors that influence human perception of, and engagement with, robotic systems.

Maleen Jayasuriya

Staff

Maleen Jayasuriya is a Lecturer in Robotics at the University of Canberra's Faculty of Science and Engineering, with a research focus on human-robot interaction and explainable AI (XAI) in robotics. Maleen holds a Bachelor's degree in Electrical and Electronic Engineering, earned in Sri Lanka, and a PhD from the University of Technology Sydney (UTS), where his research focused on robot perception, localisation, and deep learning. He later completed a postdoctoral fellowship at UTS, contributing to research on collaborative robotics for sustainable construction.